If you’ve ever sifted through 500 resumes just to shortlist 15 candidates, you know that the real cost of manual hiring isn’t just time—it’s focus. Tagging applicants, updating spreadsheets, and reconciling notes across platforms gradually shift your attention away from candidate evaluation and into administrative maintenance.

Over time, this mental workload compounds into slower hiring cycles, increased error rates, and cognitive fatigue, before you’ve even begun serious assessment.

An AI candidate screening system closes that gap by automating administrative tasks, so hiring teams can focus on making smarter decisions, faster.

If you’re curious how AI screening works, where to begin, or how to implement it responsibly, this guide will walk you through the essentials.

In this article, we’ll cover:

- What AI candidate screening really means

- How AI-based screening works

- Types of AI systems and their pros and cons

- Common pitfalls and ethical concerns

- How to adopt AI screening safely (and measure effectiveness)

- What the future of AI-led screening looks like

Want to hire faster, safer, and more ethically? Learn where AI hiring is going and how to bias-proof your recruitment strategy in this 5-minute exclusive roundup with executives at Microsoft, Deloitte, True Search, and more.

What is AI candidate screening? How does it work?

AI candidate screening uses algorithms and automation to analyze, summarize, and prioritize applicants faster. For many recruiters, it is a means to support hiring decisions, never to make them.

In practice, there are two layers to screening with AI:

- Initial screening: when you need to cut through the noise of hundreds of applications and identify people who meet the basic criteria.

- Assessment or evaluation: when you go deeper, analysing communication, reasoning, and fit among qualified candidates.

Smart teams use AI across both layers to boost efficiency, accuracy, and fairness. It helps you spend less time searching—and more time engaging—with talent.

How AI-Based Candidate Screening Works

An AI-powered candidate screening process works by breaking down the most time-consuming parts of recruitment and automating what machines are best at: pattern recognition, data sorting, and summarising information at scale.

Here’s the broad workflow:

- Pre-screening: AI tools scan incoming applications or responses to identify those that meet the baseline requirements. Think of it as intelligent triage: separating relevant profiles from the rest without discarding anyone unfairly.

- Assessment: Once candidates move forward, AI assists by analyzing responses to structured questions: spotting themes, identifying standout points, and flagging potential mismatches.

- Summarisation: Advanced tools like Willo’s convert hours of interviews into searchable transcripts and highlight key phrases, tone, or communication traits for human reviewers.

- Feedback Loops: Over time, AI learns from recruiter feedback to improve its summaries, refine what it highlights, and align more closely with each company’s hiring style.
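To make the pre-screening step concrete, here is a minimal sketch of "triage without discarding." It is an illustration only: the `Application` fields, the two-year threshold, and the function names are assumptions made up for this example, not how any particular tool works. The point is that applications that miss a baseline are routed to a human review queue with the reason attached, rather than being silently dropped.

```python
from dataclasses import dataclass, field


@dataclass
class Application:
    # Hypothetical fields; real application data will look different.
    name: str
    years_experience: float
    has_work_authorization: bool
    unmet: list[str] = field(default_factory=list)


def pre_screen(app: Application, min_years: float = 2.0) -> Application:
    """Record which baseline requirements are unmet; never drop the application."""
    app.unmet = []
    if app.years_experience < min_years:
        app.unmet.append(f"under {min_years:g} years of experience")
    if not app.has_work_authorization:
        app.unmet.append("work authorization not confirmed")
    return app


def triage(apps: list[Application]) -> tuple[list[Application], list[Application]]:
    """Split applications into 'meets baseline' and 'needs human review' queues."""
    screened = [pre_screen(a) for a in apps]
    meets = [a for a in screened if not a.unmet]
    review = [a for a in screened if a.unmet]
    return meets, review


applications = [
    Application("Candidate A", 5, True),
    Application("Candidate B", 1, True),
    Application("Candidate C", 3, False),
]
meets_baseline, needs_review = triage(applications)

print("Meets baseline:", [a.name for a in meets_baseline])
for a in needs_review:
    # The reason travels with the candidate so a recruiter can overrule it.
    print("Needs review:", a.name, "->", "; ".join(a.unmet))
```

Keeping the unmet reasons on the record is a deliberate choice here: it gives the recruiter something to check and override, which fits the "support decisions, never make them" principle.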

Under the hood, these systems rely on three core technologies:

- Machine Learning (ML): Learns patterns from past hiring data to improve future predictions.

- Natural Language Processing (NLP): Understands and interprets the language used by candidates, from written answers to spoken interviews.

- Speech Recognition and Transcription: Converts video and audio interviews into accurate text data for easy analysis.

But the operating principle here is that AI is there to help recruiters understand candidates, not eliminate them. Instead of filtering candidates out, you focus on “drawing insights in.”

The goal is not to assign scores or pass/fail judgments but to surface information that empowers better human decisions. This is one of the keys to inclusive hiring practices.

Types Of AI Candidate Screening Systems And How They Work

Not all AI screening systems are built the same, and not all are worth your trust. Some rely heavily on keyword matching. Others are little more than automated chat forms dressed up as intelligence.

Then there are the human-centred ones, designed to help recruiters recognize potential, not just process profiles. For now, let’s unpack the main types, how they work, and where each one shines or falls short.

1. Resume parsing system

An AI-powered resume parsing system uses techniques like semantic matching and deduplication to extract data from resumes, such as job titles, skills, and years of experience, and then ranks candidates based on keyword matches.

Pros:

- Lightning-fast sorting of large applicant volumes.

- Consistent formatting for comparison.

- Easy to integrate with existing ATS tools.

Cons:

- High bias risk: if the algorithm learns from biased data, it repeats that bias.

- Keyword dependency: miss one phrase, lose the match.

- Poor predictor of soft skills, creativity, or growth potential.

Verdict:

Fast for triage, but limited for deeper evaluation. It’s the legacy approach — useful for filtering, but poor at understanding. In fact, applicants have developed several ways to bypass resume screeners, making it hard for recruiters to detect AI-generated resumes.
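The keyword-dependency problem is easy to see in a toy example. The sketch below is a deliberately naive matcher written for illustration; the function name, keyword list, and resume snippets are all made up, and real parsers are more sophisticated. It shows how two candidates with similar experience can end up at opposite ends of a keyword-based ranking.

```python
import re


def keyword_score(resume_text: str, keywords: list[str]) -> float:
    """Fraction of required keywords found as whole words (case-insensitive)."""
    text = resume_text.lower()
    hits = sum(
        1
        for kw in keywords
        if re.search(r"\b" + re.escape(kw.lower()) + r"\b", text)
    )
    return hits / len(keywords)


required = ["javascript", "project management"]

# Same kind of experience, different wording (both snippets are invented).
resume_a = "Built JavaScript dashboards; certified in project management."
resume_b = "Built JS dashboards; managed projects across three teams."

print(keyword_score(resume_a, required))  # 1.0: both phrases appear verbatim
print(keyword_score(resume_b, required))  # 0.0: "JS" and "managed projects" do not match
```

The second candidate is not less qualified on paper; they simply phrased things differently, which is exactly the "miss one phrase, lose the match" risk.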

2. Chat or text-based pre-screening bots

Candidates interact with an AI chatbot that asks eligibility questions (“Are you authorised to work in the UK?” “Do you have X years of experience?”). The bot filters responses and builds a shortlist for recruiters.

Pros:

- Efficient for first-stage screening.

- Delivers a uniform candidate experience.

- Works across time zones with zero scheduling conflicts.

Cons:

- Shallow engagement: can’t assess communication or authenticity.

- Often too rigid — real talent can fall through the cracks.

Verdict:

Good for basic filtering, not for assessing human potential.

3. AI-powered video assessment

Candidates record answers asynchronously via video or audio. AI steps in to transcribe, summarise, and highlight themes or skills, but it doesn’t grade or score people.

Pros:

- Richer insight: tone, expression, confidence, and storytelling come through.

- More inclusive: candidates respond on their own time, anywhere in the world.

- Greater consistency: everyone answers the same structured questions.

Cons:

- Dependent on stable devices and internet access.

- Some candidates may still feel camera anxiety (solved through better UX and guidance).

4. Phone-based AI screening

Candidates answer pre-recorded or AI-asked questions over a phone call; the system records, transcribes, and summarizes responses for recruiters to review in the ATS. Some systems run an interactive voice interview and return behaviour/communication scores or ranked shortlists.

Pros:

- Low friction for candidates. No app, webcam, or bandwidth-heavy video; candidates can respond any time.

- Standardized early screening. Consistent question sets and machine-assisted summaries reduce interviewer variation.

Cons:

- Automatic speech recognition can have higher error rates for certain accents/speech varieties, raising potential bias.

- Audio answers alone rarely replace a task/assessment; you’ll still want a brief skills screen later.

Best for: Early funnel triage before a short skills test or async case. Pair phone-AI with a micro-assessment to improve signal quality after the first pass.
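One way to check the accent concern on your own data is to compare word error rate (WER) across speaker groups: take a handful of recordings, have a human transcribe them, and measure how far the machine transcript drifts from the human one. The sketch below assumes you have such pairs; the group labels and sentences are invented, and `word_error_rate` is a plain word-level edit distance written for this example, not a vendor API.

```python
from collections import defaultdict


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # Classic dynamic-programming edit distance, one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, substitution))
        prev = curr
    return prev[-1] / len(ref)


# Made-up samples: (speaker group, human transcript, machine transcript).
samples = [
    ("group_a", "i managed a team of five engineers", "i managed a team of five engineers"),
    ("group_b", "i managed a team of five engineers", "i manage a team of fine engineers"),
]

rates = defaultdict(list)
for group, human, machine in samples:
    rates[group].append(word_error_rate(human, machine))

wer_by_group = {g: sum(v) / len(v) for g, v in rates.items()}
print(wer_by_group)
```

A consistently higher WER for one group suggests the summaries built on those transcripts deserve closer human review for that group.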

Across these systems, the truth is that AI doesn’t have to make hiring cold. When used right, it makes hiring clearer, surfacing insights humans might miss while keeping empathy at the core of the process. That’s why it’s important to know exactly where AI helps, and where it can be a blocker.

What Are The Common Pitfalls And Ethical Concerns Of AI-Powered Candidate Screening?

AI can optimize hiring beautifully, but without guardrails, it can also magnify bias at scale. Let’s break down the main pitfalls every HR leader should watch for:

1. Algorithmic bias

AI models are only as fair as the data they learn from. If past hiring data favoured certain schools, accents, or regions, the AI will mirror that prejudice — just faster and more confidently.

There is an infamous example: Amazon’s recruitment AI “learned” to downgrade CVs containing the word “women’s” because its historical data was male-dominated.

The lesson here is that bias doesn’t vanish with automation; it scales with it.

2. Opacity and “Black Box” decisions

Many legacy AI systems can’t explain why they reject or recommend a candidate. That’s a compliance nightmare, especially under NYC Local Law 144, the Illinois AI Video Interview Act, and the EU AI Act, the last of which classifies recruitment AI as “high risk.” If your tool can’t provide audit trails or transparency reports, it’s not just risky, it’s indefensible.

3. Over-automation

Some systems make decisions entirely without human review, from scoring videos to assigning personality labels. That’s where candidate experience suffers most. People feel dehumanised when reduced to data points.

Recruiters, too, lose context and empathy. These are two things no algorithm can replicate. AI should inform decisions, never make them. Recruiters remain the final authority.

4. Negative candidate experience

Fully automated processes can feel impersonal, reducing opportunities for candidates to express themselves authentically. They lose the chance to express nuance — humour, empathy, passion — all the things that make us hirable humans.

Human-led systems like Willo counter this by putting empathy back in the process. Recruiters see and hear candidates in their own words, supported by AI insights, not reduced to numbers.

5. Ethical and legal gaps

With global hiring now the standard, compliance is no longer optional. Regulations like GDPR, Equal Employment Opportunity laws, and emerging AI transparency mandates are evolving quickly—and recruiters who overlook them face serious legal and reputational risks.

A responsible approach demands full transparency on how candidate data is collected, processed, and stored, alongside human oversight at every critical decision point. Candidates should also be clearly informed about when and how AI is involved in the screening process.

👉 If you’re planning to implement an AI-driven candidate screening system today, start with the following safety steps—then review the key principles that underpin responsible and successful adoption.

How to Safely Adopt AI Screening and Assessment Tools

To get real value from AI screening or assessment tools, you need a safety net: a simple but disciplined framework that keeps fairness and human judgment front and centre. Here’s the five-step playbook every HR leader should use before saying yes to any AI tool:

1. Clarify your goals

Most HR teams jump into AI because they’re drowning in applications, not because they have a clear adoption strategy. Without clear goals, you risk introducing unintended bias, eroding candidate trust, and turning the “time saved” promise into another tech headache.

Want to do it right? Start with intent, not technology. Ask:

- Are we trying to shorten hiring cycles?

- Improve candidate experience?

- Increase consistency and fairness?

To bring clarity to your goals, use a recruitment gap analysis to map your current actions against key hiring stages.

For instance, in the recruitment gap analysis template below, we divide the hiring process into seven stages, to identify where current efforts fall short and where AI can add the most value.

This clarity ensures you can pinpoint where inefficiencies, inconsistencies, or missed opportunities exist, before layering AI on top.

If you can’t define the goal, you won’t know if the AI works, and you’ll end up automating the wrong processes. Download the Willo Hiring Human report to access the full recruitment gap analysis process for free.

2. Choose the right system type

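Different systems solve different problems:

- Resume parsing: fast triage of large applicant volumes.

- Chat or text-based pre-screening bots: basic eligibility filtering.

- AI-powered video assessment: richer insight into communication and storytelling.

- Phone-based AI screening: low-friction early-funnel triage before a short skills test.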

If your goal is to understand candidates, not just sort them, video-first AI is your best friend. That’s why Willo uses asynchronous video — flexible, scalable, and deeply human.

3. Validate for fairness and bias

Before rolling out an AI tool, run a bias audit:

- Test with diverse candidate pools.

- Monitor demographic patterns.

- Document fairness checks (this isn’t optional under laws like NYC Local Law 144).
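A common starting point for monitoring demographic patterns is the impact ratio: each group’s selection rate divided by the highest group’s selection rate. The sketch below is a rough illustration under stated assumptions. The group names and counts are invented, and the 0.8 cut-off is the widely used “four-fifths” rule of thumb, assumed here as a flagging threshold rather than a requirement of any specific law. A real audit should follow the methodology your auditor and legal team specify.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group -> (candidates advanced, total candidates)."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate relative to the highest-rate group."""
    rates = selection_rates(outcomes)
    top = max(rates.values())
    if top == 0:
        # Nobody advanced in any group, so there is no disparity to measure.
        return {g: 0.0 for g in rates}
    return {g: r / top for g, r in rates.items()}


# Invented counts for illustration only.
outcomes = {
    "group_a": (40, 100),
    "group_b": (24, 100),
    "group_c": (36, 90),
}

THRESHOLD = 0.8  # assumed rule-of-thumb cut-off, not legal advice

ratios = impact_ratios(outcomes)
flagged = [g for g, r in ratios.items() if r < THRESHOLD]

for group, ratio in ratios.items():
    print(group, round(ratio, 2))
print("Review needed for:", flagged)
```

A flagged group is a prompt to investigate, not proof of bias; small samples in particular can swing these ratios widely.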

Ask the vendor tough questions:

- “Can I see how your model makes decisions?”

- “When was your last third-party audit?”

- “How do you handle candidate consent?”

Transparency is non-negotiable. If a vendor dodges, walk away.

4. Maintain human oversight

Recruiters, not AI, should always make the final call. Use AI to summarise, highlight, and surface insights, not to rank or reject. That way, you get faster hiring without losing the human factor.

5. Track and measure AI screening effectiveness

Implementing AI in recruitment requires tracking recruitment metrics, not just intuition. If you don’t track results, you can’t show impact or improve your process.

Key AI candidate screening metrics to track are:

- Time-to-hire (recruiter efficiency): With the right AI screening system in the loop, your hiring cycle should get measurably shorter while candidate quality holds steady. Use Willo’s time-to-hire calculator to compare your baseline with your results after AI adoption.

- Completion rate: Calculate the percentage of candidates who finish the assessment process. A rising completion rate points to better process quality and candidate comfort. For instance, one national nonprofit organization increased its application completion rate to 55% (up from 33%) through AI-powered asynchronous screening.

- Candidate satisfaction: After the screening & assessment cycle, survey applicants to collect feedback on the fairness, convenience, and transparency of the process. What you learn directly impacts employer brand and offer acceptance rates.
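The first two metrics are simple to compute yourself if you can export dates and counts from your ATS. Here is a minimal sketch, assuming you have application and offer-acceptance dates for each hire plus started/completed counts for the assessment. All dates and numbers below are invented for illustration, and the function names are hypothetical.

```python
from datetime import date
from statistics import median


def time_to_hire_days(hires: list[tuple[date, date]]) -> float:
    """Median days between application date and offer acceptance."""
    return median((accepted - applied).days for applied, accepted in hires)


def completion_rate(started: int, completed: int) -> float:
    """Share of candidates who finished the assessment they started."""
    return completed / started if started else 0.0


# Invented (applied, accepted) pairs before and after adopting AI screening.
baseline = [
    (date(2024, 1, 2), date(2024, 2, 13)),
    (date(2024, 1, 8), date(2024, 2, 12)),
    (date(2024, 1, 15), date(2024, 3, 4)),
]
after_adoption = [
    (date(2024, 3, 4), date(2024, 4, 1)),
    (date(2024, 3, 11), date(2024, 4, 15)),
    (date(2024, 3, 18), date(2024, 4, 8)),
]

print("Median time-to-hire before:", time_to_hire_days(baseline), "days")
print("Median time-to-hire after:", time_to_hire_days(after_adoption), "days")
print("Completion rate:", f"{completion_rate(started=200, completed=110):.0%}")
```

The median is used instead of the mean so that one unusually slow hire does not distort the comparison.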

Want to future-proof AI hiring adoption? Optimize for these 4 incoming trends

The future of AI-based hiring is about amplifying what humans do best: judgment, empathy, and connection. We’re moving from automation to augmentation, from machines that replace to machines that reinforce.

Here’s what that evolution looks like:

1. Human-centred AI will lead the pack

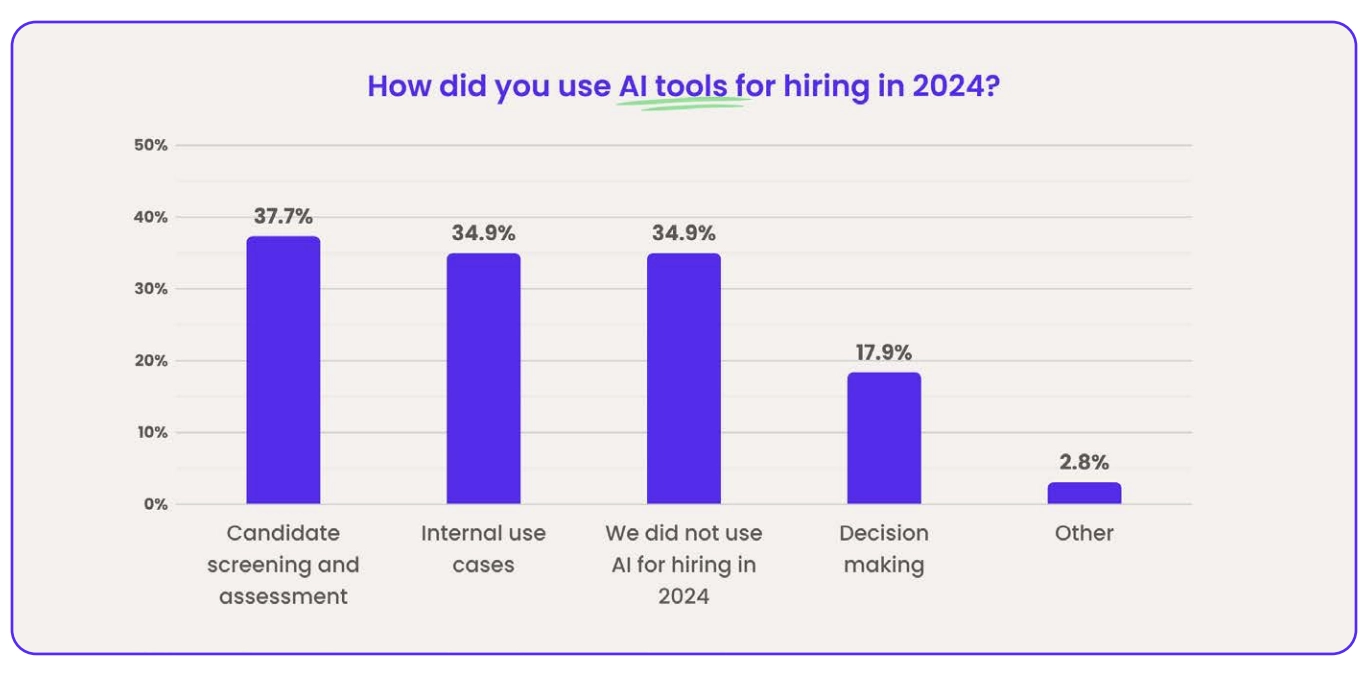

Despite rapid growth, with AI recruitment spending projected to reach 15.32 billion by 2030, the majority of recruiters still don’t trust AI to make hiring decisions on their behalf. Of the 500+ talent leaders we asked, only 17% trusted AI to make hiring decisions.

They value automation for efficiency but want to retain final judgment themselves. This underlines the growing demand for human-led AI solutions, where AI can streamline pre-screening, but true assessment must remain human-guided to preserve context, fairness, and authenticity.

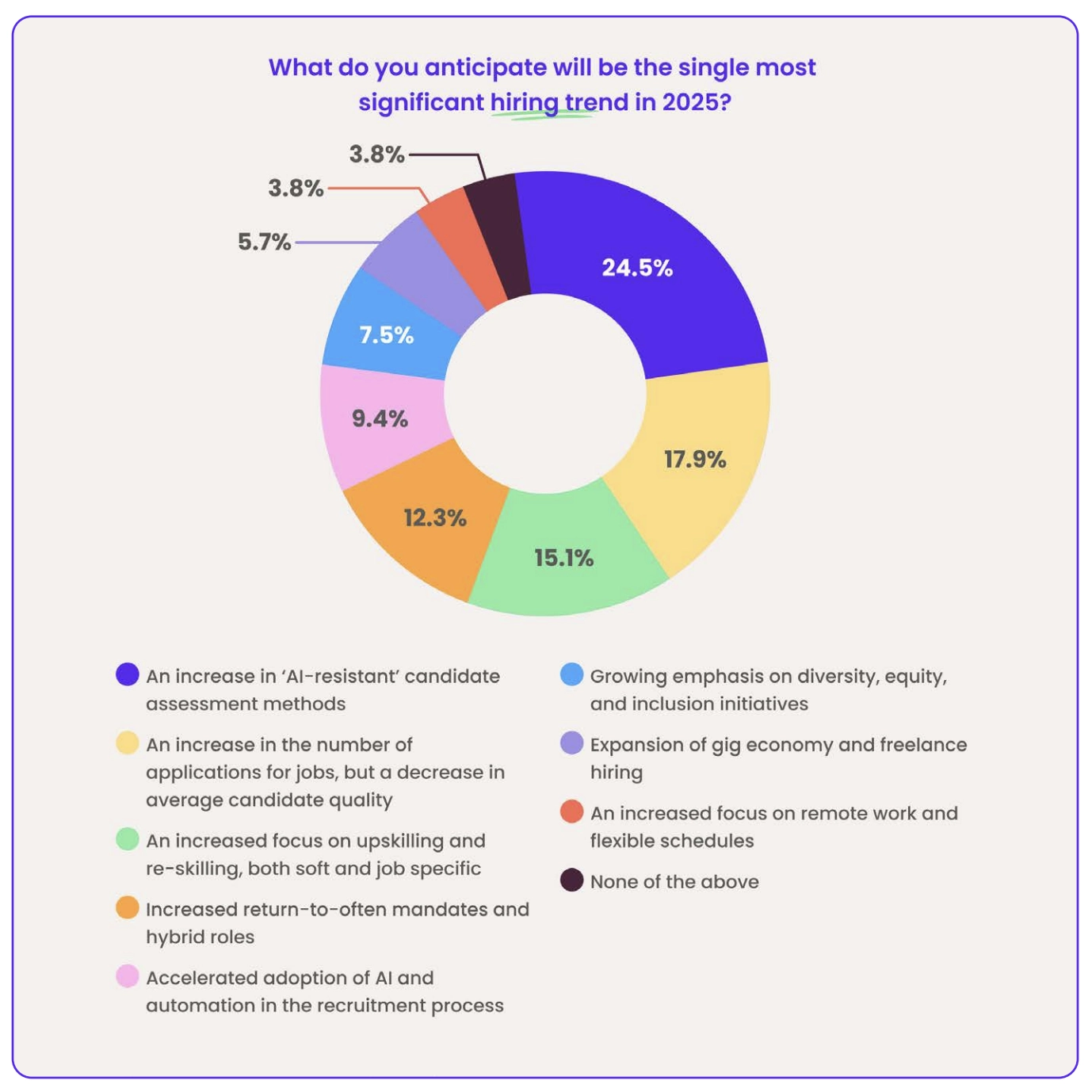

2. Increase in AI-resistant assessment approaches

With the rise of AI-generated résumés, cover letters, and interview preparation tools, candidates can now apply to far more jobs in far less time.

The downside? Many of those applications don’t truly represent the person behind them, making it harder for recruiters to gauge real skills or experience.

As application volumes climb, the overall quality of candidates often drops. To counter this, HR teams are turning to AI-resistant assessment methods such as live video interviews and real-time skill tests.

These tools help recruiters assess authenticity and communication ability—insights that no AI-generated response can reliably provide.

3. Regulations will tighten, and that’s a good thing

Compliance won’t be a checklist; it’ll be a competitive edge. Companies that demonstrate fairness, transparency, and accessibility will earn the trust of candidates. Policies such as New York City’s Local Law 144 now require an independent bias audit and notices to candidates. Non-compliance will not only carry penalties; it will also compound into bad Glassdoor reviews and legal gray zones.

4. Inclusivity and accessibility will redefine the candidate experience

AI will help remove the invisible barriers that have excluded people for decades — rigid schedules, language bias, geographic limits. Video-first, asynchronous formats already empower candidates in multiple time zones, and AI translation tools are making interviews accessible in over 30 languages.